My goal here is to emphasize the power of first principles thinking by showing how easy complicated things become, when broken down.

Background

So yes, I had the audacity to take a compilers class in my first semester of college. Though my professor warned me not to, I got curious with the title "Structure and Interpretation of Computer Programs. (SICP)" and I did it anyway. We started off with Scheme, a dialect of Lisp and slowly moved forward with the famous wizard textbook titled—”Structure and Interpretation of Computer Programs”. I was new to functional programming-style and had a bit of a hard time differentiating programming styles of Scheme from various languages I interacted with before. I kept forgetting syntaxes & basic rules. So, to battle this issue, I started doing what I do best: documenting my learnings.

I had two reasons behind being this “documentation geek”:

- Ideas are clearer when written down.

- Sharing knowledge and resources are core to my heart.

So I started writing down some basic building blocks of Scheme on a GitHub repo under the name: educational-scheme-library. What I did here was documenting down how to write the bare basics e.g., arithmetics, if-else, cond, input, & list with examples, so that anyone starting with Scheme can use this as reference amid all other messy textbook lines or website showoffs. Additionally, I’ve noticed the scarcity of problem solving opportunities with Lisp like we can do with Online Judges (if anyone knows any OJ that accepts Lisp, please share). So, I’ve solved some basic brewcrowd (formerly URI Online Judge) problems myself and put it out as reference. Note that, these are the extreme basic tools for Scheme and the repo has a lot to work on. But one good thing about creating your own documentation of learning is that you can go back to coding months later and still can catch-up fast without skimming through long texts or 4hr videos—at least works for me.

Now that was the easy part, the hard part came at the end of the tunnel (I mean the course), when you see the course project is building a compiler from scratch. In most cases, compilers are like a totally separate course where you learn about it throughout the whole course and then go off to building one. But having Compilers included in the SICP course caught me off guard.

Now those of you who know about compilers might know the Dragon Book (how could you not?). Well, I didn’t wanna go through another ancient text book at the end of the term, demystifying each of the sentences. So, I resorted to some online courses and articles that were somewhat helpful in describing how a compiler works. But when you have a lot of stuff thrown at your face at the same time, you have a hard time seeing each of its phases. I was hopeless at that point.

Coincidentally enough, around that time, I discovered this video on YouTube, titled: “Most Powerful Way to Think: First Principles.”

First Principles

The smallest category in any domain is called first principle. Let’s say there’s a mango tree and you could just see the mango and the tree and leave it to be. That’d be an understanding of the objects, but not the deepest one. But someone thinking from the first principles will see the mango connected to a branch and the branch to the tree and the tree to its roots and concluding the roots as the most vital part of the tree. Thinking like this makes the thinker organize the free floating knowledge into a categorized one in relation to each other and that’s how great thinkers like Aristotle used to think. An idea or knowledge that’s built from true first principles are the most powerful ones. (more)

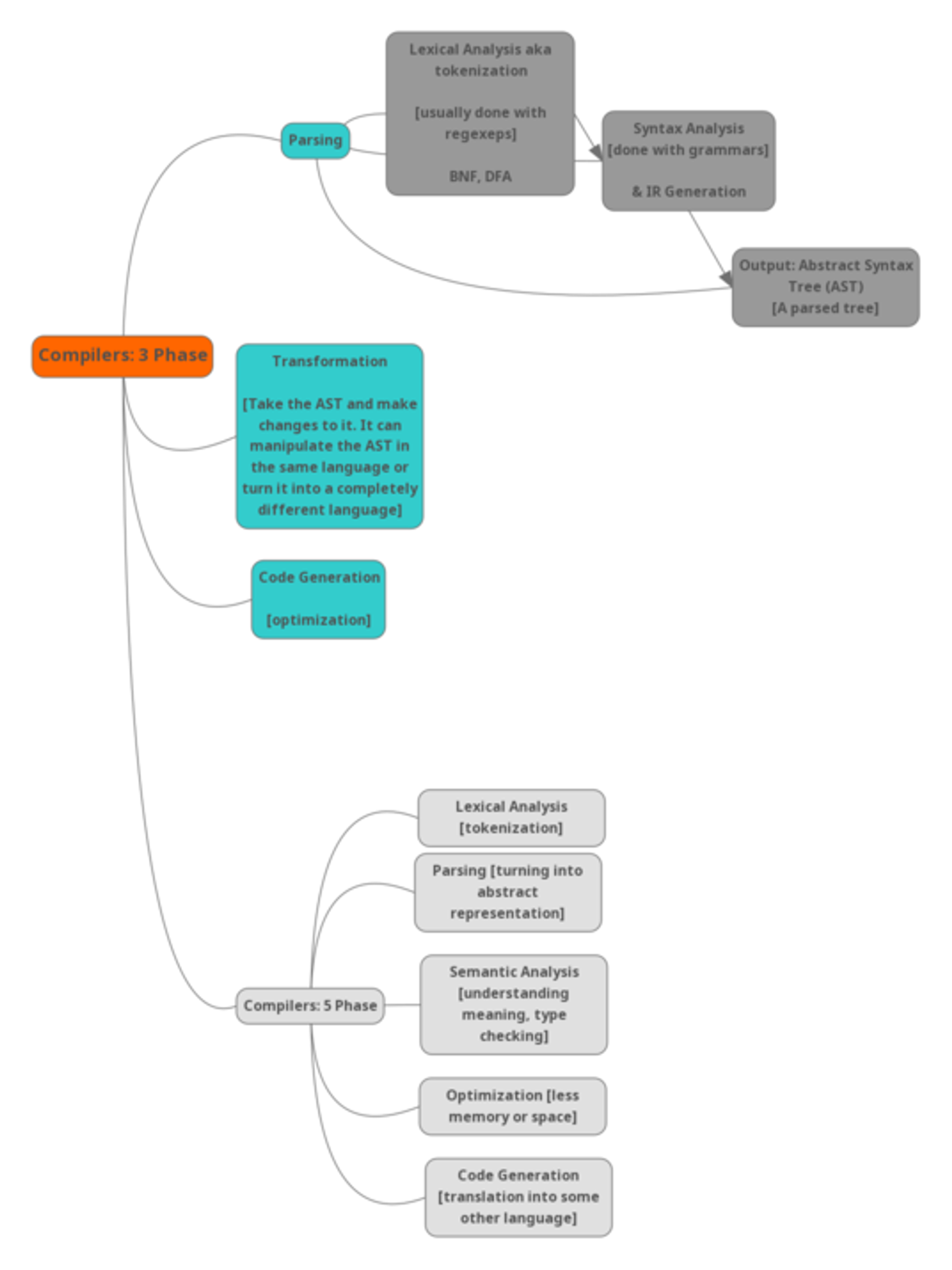

I was totally amazed how clearer I started thinking when I broke down the abstractions into different layers whenever I started learning anything new. So in front of me was this giant concept: Compilers, that’s almost every CS student’s nightmare and I had to finish that within two weeks. So I picked up an online diagramming tool and started breaking down a compiler to its fundamental state. The end result sort of looked like this →

Compilers

Now let’s look at how first principles thinking helped me understand Compilers. Like the example before about mango trees, I started thinking how can I break down compilers to its bare phases. After some research, I learned that compilers can be broken down to 3 or 5 phase processes, depending on what the reader is concerned about learning. I’m going to talk about the 5 phase process for simplicity.

5 phases of a compiler

- Lexical Analysis

- Parsing

- Semantic Analysis

- Optimization

- Code Generation

(Click ▶️ below to expand each one)

Lexical Analysis

Let’s consider this example of a sentence:

“If you’re reading this, you’re awesome.”

To process the meaning of this sentence, we need to understand what the individual words like “If”, “reading”, etc., mean. We humans understand this sentence without any effort but if you think about it, you’re able to do that because you learned how to understand the meaning of each piece and how to correlate one word to another.

If I give you a sentence like:

“ify ou’rere adingt his, y ou’reawe some”

You’ll have a much harder time to comprehend. But it is the same sentence as above, only I’ve moved the spaces in between.

For a computer to understand a sentence, you have to break it down to smaller components that have meanings of its own.

That’s what the lexical analysis process does. It breaks down sentences or codes into smaller words, which in compilers world, known as “tokens”

Parsing

Now once you’ve understood individual words through Lexical Analysis, the next goal is to understand the structure of a sentence to use those words and make sense out of it. This is where Parsing takes place. Usually we use a tree (diagram) to represent the words in relation to its bigger branch.

You are awesome

⬇️ ⬇️ ⬇️

Pronoun Verb Adjective

Now all together form a sentence.

More Explanation

Sentence → Subject + Verb + Object → Subject (You) + Verb (are) + Object (Adjective)

Note how all of these sub-concepts can be broken down using first principles thinking as well.

Semantic Analysis

Now semantic analysis comes to play when there’s inconsistency in the syntax. Semantic Analysis is done to understand the meaning in a context. Let’s see a sentence.

Mike said Tom left his wallet at home

Now when you read this sentence, even when you understand the words and meaning through the previous steps, you can’t be sure who this “his” refers to. It could be either Mike or Tom.

In coding, this example can be analogous to assigning two separate values to the same variable and then using that value in a separate function. Different programming languages have very different ways of interpreting scopes and that’s where semantic analysis takes place.

Note that Compilers can do very limited semantic analysis. Most of the time it can catch, but doesn’t know what to do about it. So when you wonder why your IDE doesn’t give better error messages about bugs, this is the reason.

Optimization

Optimization is more like editing your sentences so that you don’t use redundant words. In coding it refers to memory and speed optimization. Look at the sentence below:

But a little bit like editing

If I want to shorten this sentence, I can just say: “But akin to editing” . It basically means the same thing but uses fewer words.

Code Generation

Code generation is the process of translating our code into a code of another language. It is similar to human language translation, where we might translate something from Spanish to English or vice versa.

Usually we take our code in the coding language we’re writing and translate it to assembly language.

At a glance this is what the compiler does to interpret this sentence of mine: “I happen to have a lot of friends who I can share ideas with.”

First lexical analysis understands each word separately as a token. Then through parsing, we represent the tokens as trees. Then we understand the meaning of the sentence as a whole, in the context of a paragraph or text. After that we use optimization to reduce words like “happen to”, “a lot of”, and “who I can” and replace it with appropriate words.

We can get a sentence like: “I have many friends to share ideas with.”

Then we translate it to the appropriate language of our need using code generation. And that’s how pretty much all compilers work.

Things have become way easier to follow through after I’ve gone through this thinking process. I started learning about these different components like lexers, parsers, tokenization, etc. separately through a variety of sources. I ended up with a “gudenough” understanding of the compiler and finished the project with a compiler written in Java for a pascal-like language. Now I’m not saying I know everything about the compiler after this, but I had a decent (or descent? sorry joke had to be made) understanding of compilers going through the process. And because I documented everything in a GitHub repo for the aforementioned two reasons, I can go back to it at any time and pick it up from there. You’ll find the repo under this hood iwillcompileyou. where I have done my share of resource gathering if you want it for a class or hobby.

There you go. Your boy has gone through the Compilers class, what’s bigger to lose?! On a serious note, I don’t recommend any freshman to do this. But going through the process of applying first principles thinking and learning compilers had a sweet spot in the middle and somehow I started to love this part of CS unlike many. I’d be happy to continue this journey further in some time.

But until then, keep thinking from first principles. Anyone interested in learning more about this can check this awesome blog: